🏁 Participate in the Challenge

Thanks for your interest in the Embodied Agent Interface Challenge!

Follow the instructions below to get started with the Final Evaluation Phase of the EAI Challenge, and most importantly, have fun!

📚 Resources

To help you get up to speed and make the most of the EAI Challenge, we have prepared a set of essential resources. We recommend exploring them in the following order for the smoothest experience:

- 📝 Tutorial: Step-by-step guide to setting up your environment and understanding the challenge

- 📖 Documentation: Complete reference for evaluating and troubleshooting the four ability modules

- 🐳 Docker Image: Prebuilt environment for running your experiments hassle-free

📦 Submission Preparation

To make participation more accessible to the broader embodied AI community, we have designed a straightforward submission process. You are not required to set up or run the complex BEHAVIOR or VirtualHome simulation environments. All you need to provide is your model’s outputs. We handle the rest and run the evaluation in a sandboxed environment on our own infrastructure.

The Starter Kit has been updated with everything you need to get started quickly with the final evaluation phase. The kit includes:

- latex_template/: A template for writing your technical report.

- llm_prompts/: A directory containing all the prompts you will use to query your model.

- sample_submission/: A sample submission folder that shows the required format and structure for your model’s outputs.

- starter.ipynb: A notebook to guide you through the process of generating outputs and preparing your submission.

📁 Starter Kit Structure

eai_starter_kit/
├── latex_template/
│   ├── technical_report_template.tex
│   ├── references.bib
│   └── neurips_2025.sty
├── llm_prompts/
│   ├── behavior_action_sequencing_prompts.json
│   ├── behavior_goal_interpretation_prompts.json
│   ├── behavior_subgoal_decomposition_prompts.json
│   ├── behavior_transition_modeling_prompts.json
│   ├── virtualhome_action_sequencing_prompts.json
│   ├── virtualhome_goal_interpretation_prompts.json
│   ├── virtualhome_subgoal_decomposition_prompts.json
│   └── virtualhome_transition_modeling_prompts.json
├── sample_submission/
│   ├── behavior_action_sequencing_outputs.json
│   ├── behavior_goal_interpretation_outputs.json
│   ├── behavior_subgoal_decomposition_outputs.json
│   ├── behavior_transition_modeling_outputs.json
│   ├── virtualhome_action_sequencing_outputs.json
│   ├── virtualhome_goal_interpretation_outputs.json
│   ├── virtualhome_subgoal_decomposition_outputs.json
│   └── virtualhome_transition_modeling_outputs.json
└── starter.ipynb
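The eight output files in sample_submission/ follow a regular naming pattern: simulator name, ability module name, then the suffix _outputs.json. As a rough sketch of a pre-upload check, the snippet below rebuilds those eight names from that pattern and reports any that are absent from a folder. The helper names `expected_output_files` and `missing_output_files` are hypothetical and not part of the Starter Kit, and the check only looks at file names, not at the contents of each file.

```python
from pathlib import Path

# Simulator and module names are taken from the file names in the tree above.
SIMULATORS = ("behavior", "virtualhome")
MODULES = (
    "action_sequencing",
    "goal_interpretation",
    "subgoal_decomposition",
    "transition_modeling",
)


def expected_output_files():
    """Return the eight output file names implied by the naming pattern."""
    return [f"{sim}_{mod}_outputs.json" for sim in SIMULATORS for mod in MODULES]


def missing_output_files(submission_dir):
    """Hypothetical helper: list expected files that are absent from a folder."""
    folder = Path(submission_dir)
    return [name for name in expected_output_files() if not (folder / name).is_file()]
```

Calling `missing_output_files("sample_submission")` on a complete folder should return an empty list; any names it does return are files that still need to be generated or renamed.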

📤 EvalAI Submission

To submit your model’s outputs for evaluation, please follow these steps:

- Review the EvalAI documentation: Familiarize yourself with the submission process and officially join the EAI Challenge. At the same time, please fill out the Participant Information Form to help us better organize the competition.

- Prepare Your Submission: Organize your model’s outputs according to the structure of sample_submission in the Starter Kit. Make sure the names of the 8 required output files exactly match the names shown in sample_submission.

-

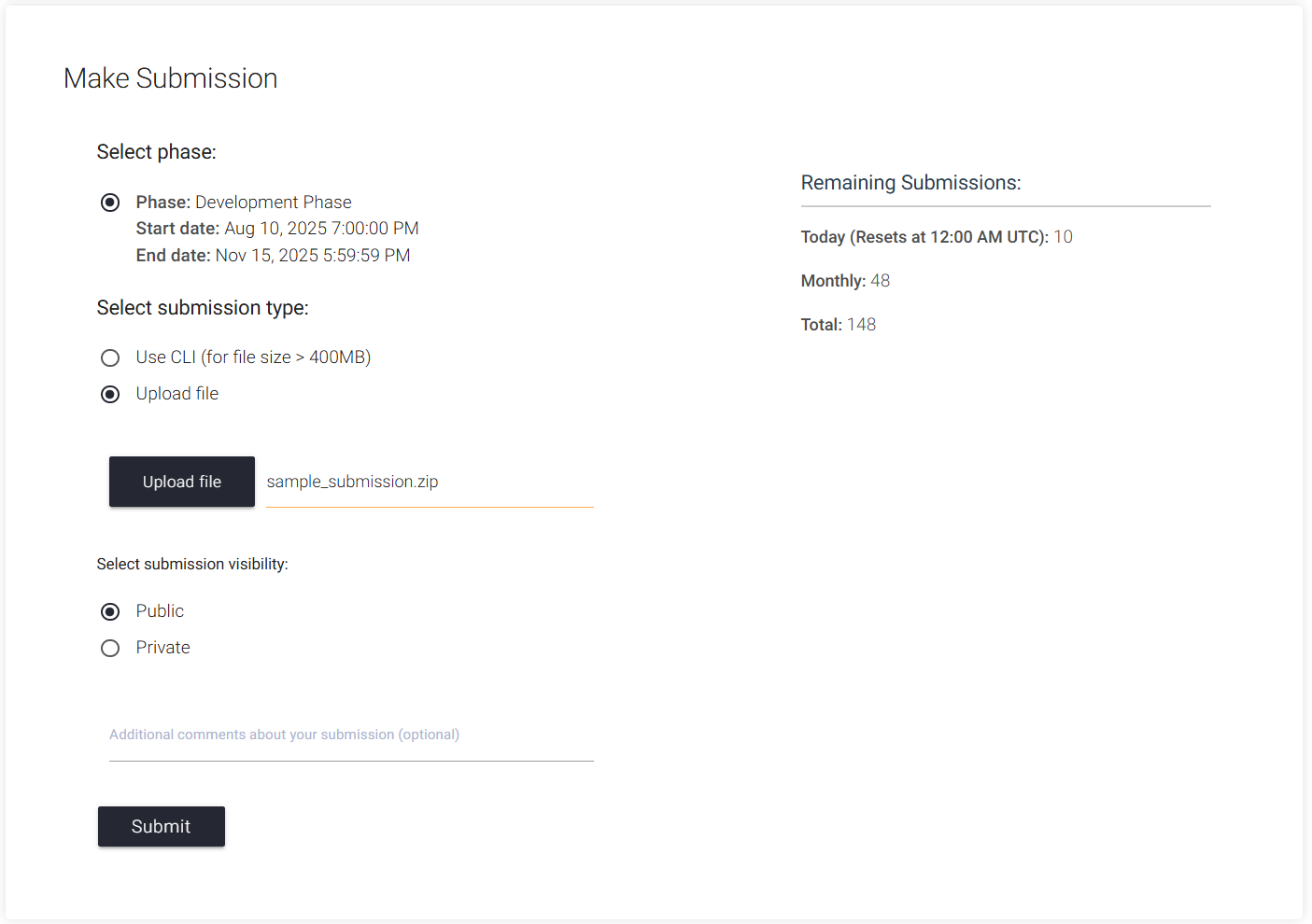

Upload to EvalAI: Use the EvalAI platform to upload your submission. Please zip your submission folder before uploading. The zipped folder should contain all required output files in the correct structure as shown below.

sample_submission.zip/ ├── behavior_action_sequencing_outputs.json ├── behavior_goal_interpretation_outputs.json ├── behavior_subgoal_decomposition_outputs.json ├── behavior_transition_modeling_outputs.json ├── virtualhome_action_sequencing_outputs.json ├── virtualhome_goal_interpretation_outputs.json ├── virtualhome_subgoal_decomposition_outputs.json └── virtualhome_transition_modeling_outputs.jsonFor Mac users, you can use the following command in the terminal to zip your submission folder without including unnecessary hidden files like

.DS_Storeor__MACOSX:zip -r sample_submission.zip sample_submission -x "*.DS_Store" -x "__MACOSX/*"A sample submission should look like this:

- Monitor Your Submission: After submitting, you can monitor the submission status on the My Submissions page and the performance of your submission on the Leaderboard. Because the evaluation involves interacting with the simulation environments, it may take 10–30 minutes to receive your results.

- Submit Your Technical Report: Once you are satisfied with your results, submit your technical report to the OpenReview submission portal before the deadline of December 1, 2025, 12:00 AM (UTC+0). Please follow the instructions on the Technical Report page to prepare your technical report.
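For participants who prefer not to rely on the zip command in the upload step, the sketch below builds the archive with Python's standard zipfile module while skipping .DS_Store files and any __MACOSX directory. The function name `zip_submission` is hypothetical. It assumes the archive should mirror what the zip command above produces, with entries stored under the folder name (for example sample_submission/...), and that the zip file is written outside the folder being archived.

```python
import zipfile
from pathlib import Path

# Hidden macOS artifacts named in the upload step.
SKIP_NAMES = {".DS_Store"}
SKIP_DIRS = {"__MACOSX"}


def zip_submission(submission_dir, zip_path):
    """Hypothetical helper: zip a submission folder, skipping macOS artifacts.

    Returns the archive entry names that were written. zip_path is assumed to
    lie outside submission_dir so the archive does not try to include itself.
    """
    folder = Path(submission_dir).resolve()
    archived = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in sorted(folder.rglob("*")):
            if not path.is_file():
                continue
            # Entry names keep the folder name as a prefix, like `zip -r` does.
            relative = path.relative_to(folder.parent)
            if path.name in SKIP_NAMES or SKIP_DIRS.intersection(relative.parts):
                continue
            archive.write(path, arcname=relative.as_posix())
            archived.append(relative.as_posix())
    return archived
```

For example, `zip_submission("sample_submission", "sample_submission.zip")` run from the parent directory writes the archive next to the folder. If EvalAI turns out to expect the JSON files at the archive root instead, computing `relative` against `folder` rather than `folder.parent` would drop the prefix.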

We look forward to seeing your innovative solutions in action! If you have any questions or need assistance, don’t hesitate to reach out to us at TianweiBao@u.northwestern.edu or post in our Slack.